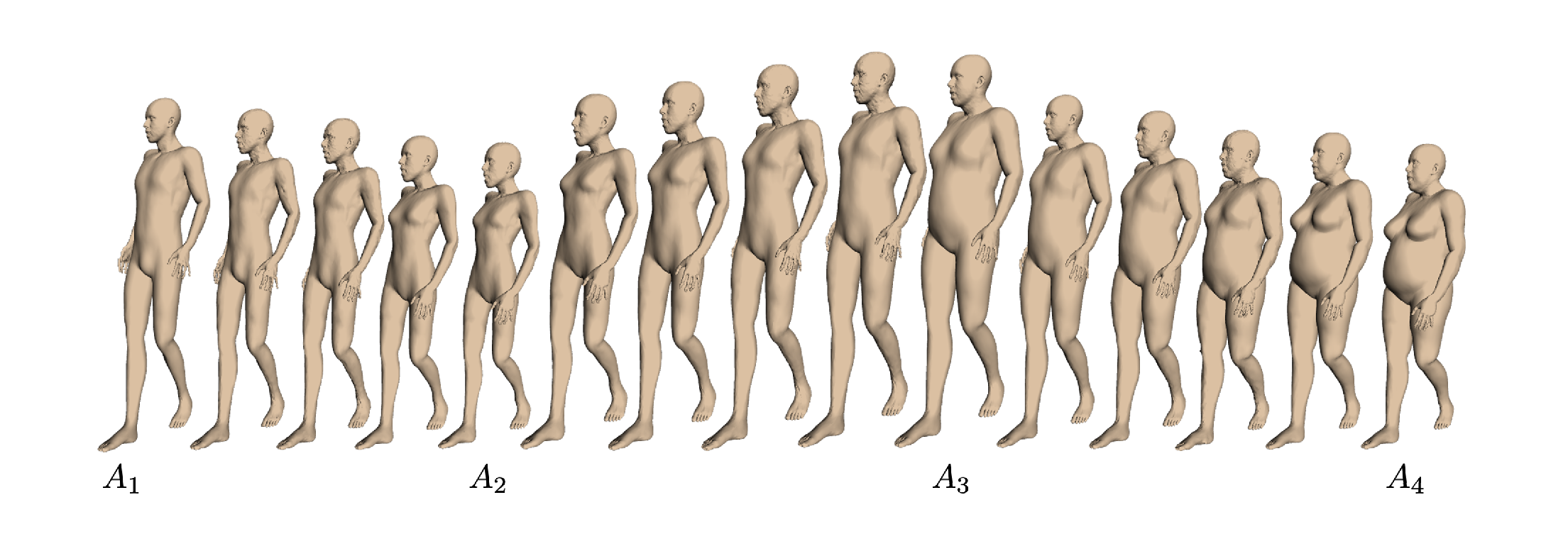

AnthroNet with Poses. The figure shows posed meshes obtained by applying LBS to generated meshes on conditionals computed by piece-wise linear interpolation between four sets of full anthropometric measurements ($A_1$, $A_2$, $A_3$, $A_4$).

Francesco Picetti,

Shrinath Deshpande,

Jonathan Leban,

Soroosh Shahtalebi,

Jay Patel,

Peifeng Jing,

Chunpu Wang,

Charles Metze III,

Cameron Sun,

Cera Laidlaw,

James Warren,

Kathy Huynh,

River Page,

Jonathan Hogins,

Adam Crespi,

Sujoy Ganguly,

Salehe Erfanian Ebadi

Unity Technologies

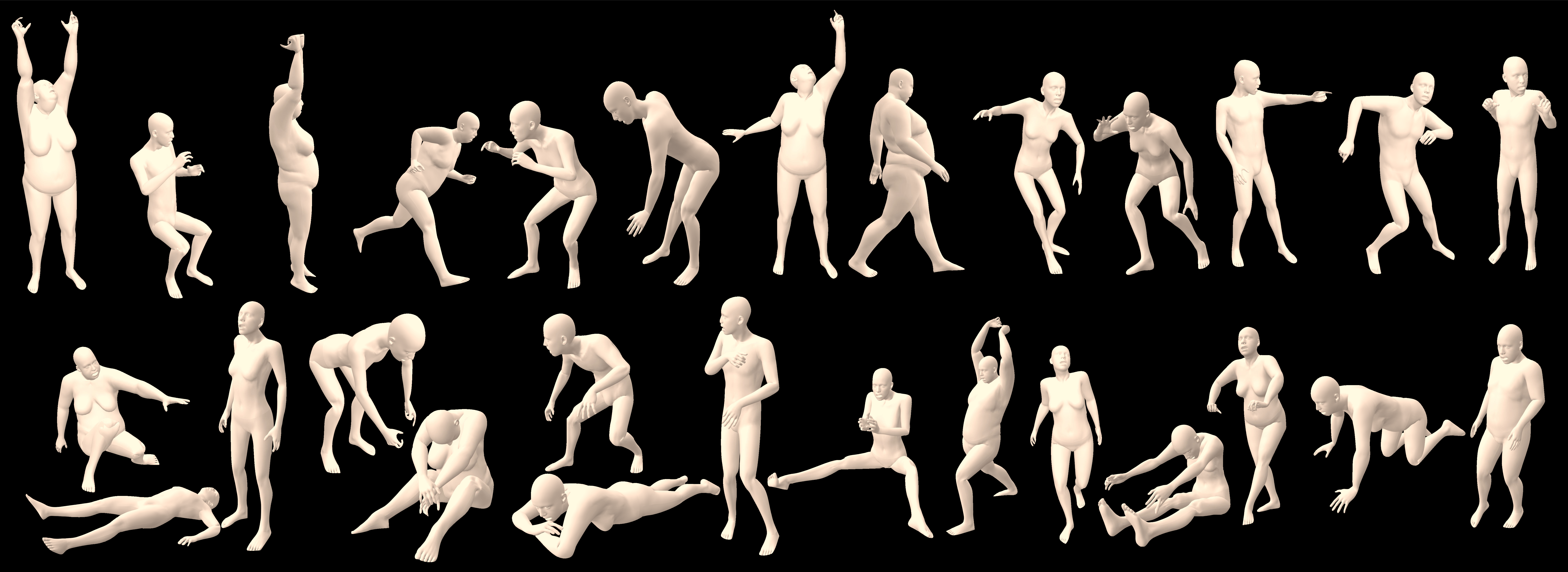

Animated AnthroNet Examples

Abstract

We present a novel human body model formulated by an extensive set of anthropometric measurements, which is capable of generating a wide range of human body shapes and poses. The proposed model enables direct modeling of specific human identities through a deep generative architecture, which can produce humans in any arbitrary pose. It is the first of its kind to have been trained end-to-end using only synthetically generated data, which not only provides highly accurate human mesh representations but also allows for precise anthropometry of the body. Moreover, using a highly diverse animation library, we articulated our synthetic humans’ bodies and hands to maximize the diversity of the learnable priors for model training. Our model was trained on a dataset of 100k procedurally generated posed human meshes and their corresponding anthropometric measurements. Our synthetic data generator can be used to generate millions of unique human identities and poses for non-commercial and academic research purposes.

Summary

- AnthroNet provides a novel, expressive, high-resolution model of human body shape conditioned on anthropometric measurements.

- AnthroNet offers two efficient registration pipelines that enable bi-directional conversion between SMPL or SMPL-X meshes and AnthroNet meshes.

- Our synthetic data generator, which can produce high-resolution meshes with their associated metadata, is publicly available for non-commercial and academic research use.

Model Architecture

AnthroNet's end-to-end trainable pipeline. At inference time, we discard the Mesh Encoder in the Mesh Generator block and decode the estimated mesh in the bind pose $\tilde{\mathcal{X}}^A_b$ from a latent vector $Z$ sampled from a normal distribution, combined with the encoded anthropometric measurements $A$. The Mesh Skinner and Poser block then predicts the pose and shape corrective offsets $\Delta \tilde{\mathcal{X}}^A_{\bar{\theta}}$ required to animate the mesh into the desired pose $\bar{\theta}$, producing a fully skinned, rigged, and posed mesh $\tilde{\mathcal{X}}^A_{\bar{\theta}}$ that matches the provided measurements $A$.
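The posed meshes on this page are obtained with linear blend skinning (LBS). As a rough illustration of that final step, the sketch below applies generic LBS to a bind-pose mesh using NumPy. It is not AnthroNet's implementation: the function name, the argument layout, and the choice to add the corrective offsets to the bind-pose vertices before skinning (the convention used by SMPL-style models) are all assumptions made for the example, and the two-vertex mesh is a toy.

```python
import numpy as np

def linear_blend_skinning(vertices, weights, joint_transforms, offsets=None):
    """Generic LBS sketch (hypothetical helper, not the AnthroNet API).

    vertices:         (V, 3) bind-pose vertex positions
    weights:          (V, J) skinning weights, each row summing to 1
    joint_transforms: (J, 4, 4) bind-to-posed transform of each joint
    offsets:          optional (V, 3) corrective offsets; adding them before
                      skinning is an assumption of this sketch
    """
    vertices = np.asarray(vertices, dtype=float)
    if offsets is not None:
        vertices = vertices + np.asarray(offsets, dtype=float)
    # Homogeneous coordinates, shape (V, 4)
    homo = np.concatenate([vertices, np.ones((len(vertices), 1))], axis=1)
    # Per-vertex weighted sum of the joint transforms, shape (V, 4, 4)
    blended = np.einsum("vj,jab->vab", weights, joint_transforms)
    # Apply each vertex's blended transform and drop the homogeneous coordinate
    return np.einsum("vab,vb->va", blended, homo)[:, :3]

# Toy example: joint 0 stays fixed, joint 1 is translated by 2 along y.
bind_vertices = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
skin_weights = np.array([[1.0, 0.0], [0.5, 0.5]])
transforms = np.stack([np.eye(4), np.eye(4)])
transforms[1, :3, 3] = [0.0, 2.0, 0.0]

posed = linear_blend_skinning(bind_vertices, skin_weights, transforms)
print(posed)  # vertex 0 stays at the origin, vertex 1 moves to (1, 1, 0)
```

The second vertex is influenced equally by both joints, so it moves by half of joint 1's translation, which is the blending behaviour that LBS gets its name from.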

Posed Synthetic Humans Dataset

Examples from our multi-subject and multi-pose synthetic dataset.

Our synthetic data generator is publicly available for academic research and can be used to create millions of unique human identities in arbitrary poses provided by the user.

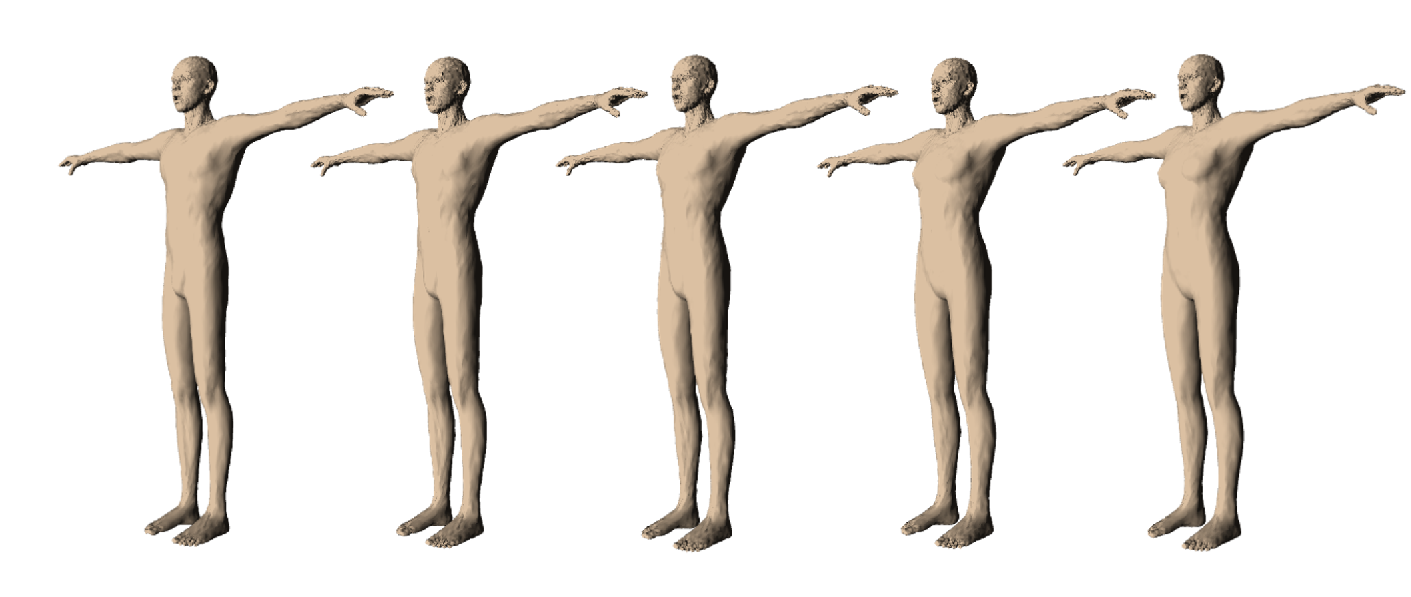

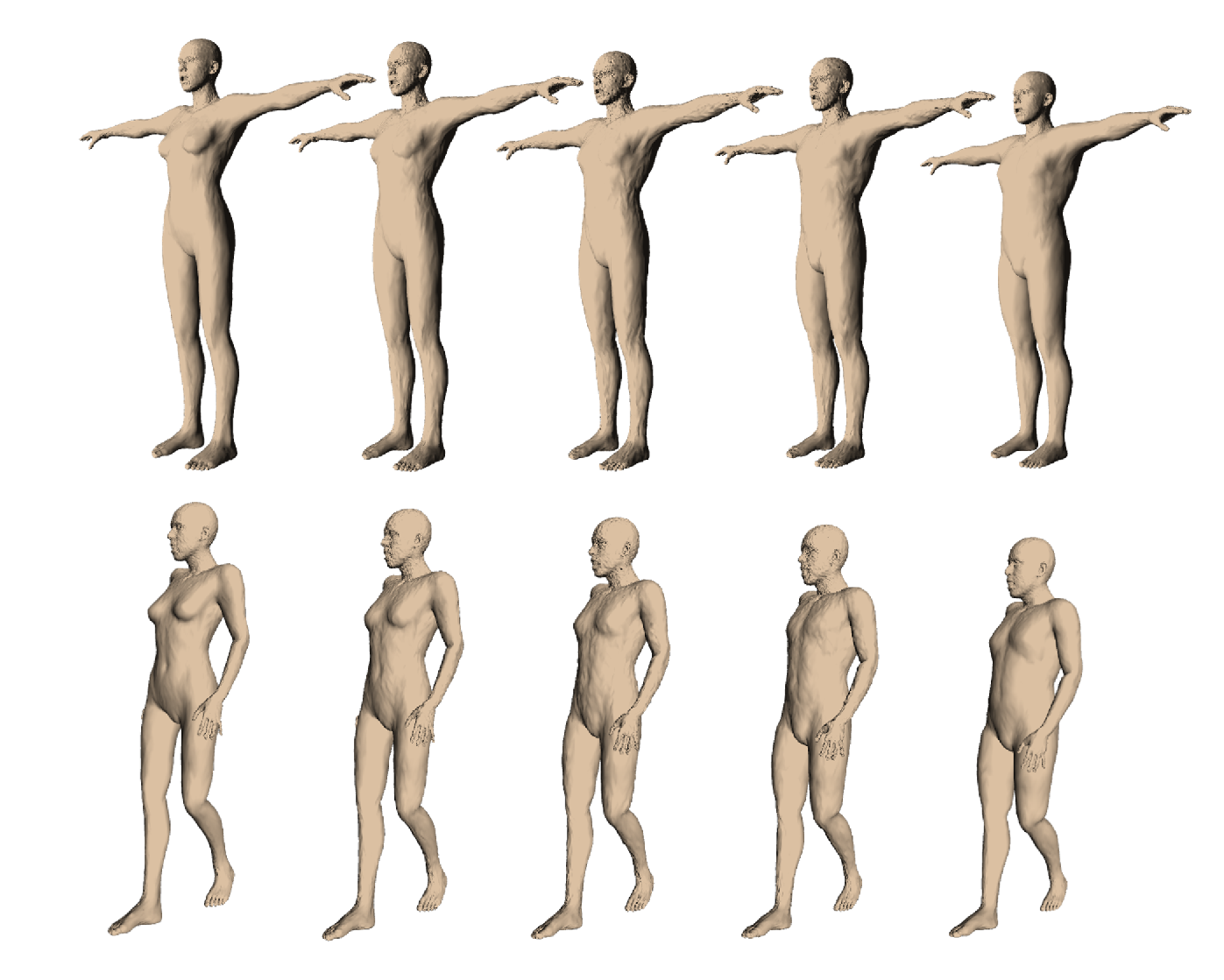

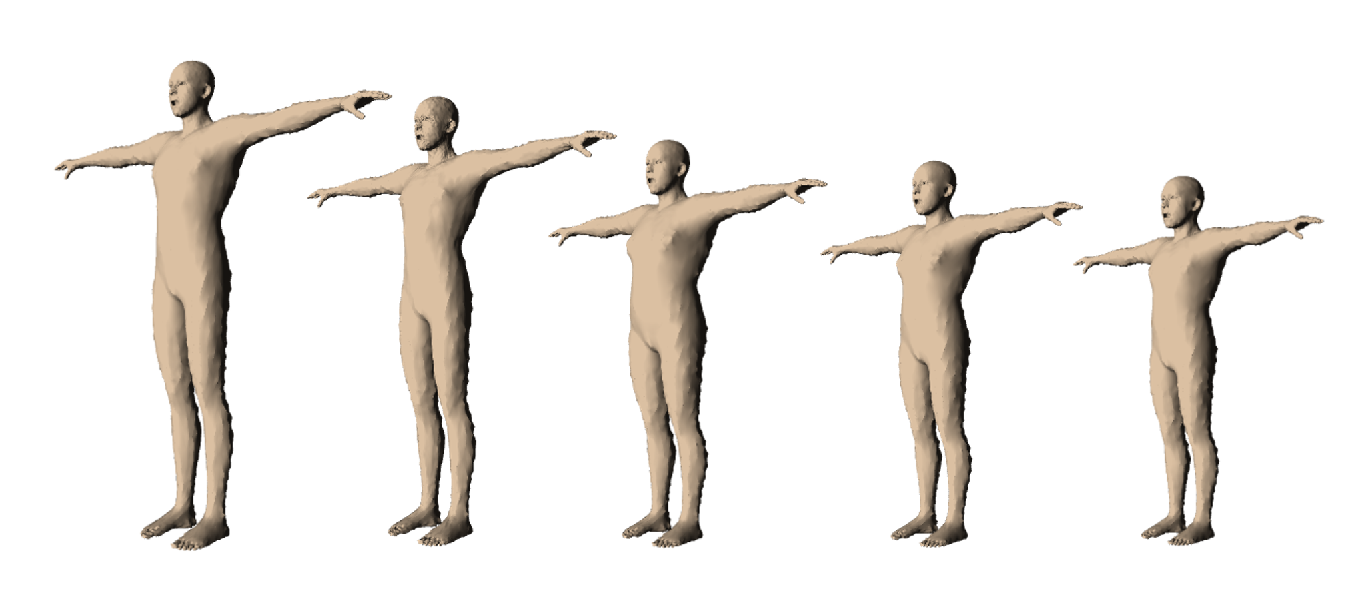

Interpolations

Human meshes generated by AnthroNet conditioned on measurements and sex. As depicted in the first figure, a mesh is generated using conditional $A_t$, which is obtained from the linear interpolation between two measurement vectors ($A_1$, $A_2$).

Lean to curvy; keeping the same height and sex.

Male to female; keeping the same anthropometric measurements.

Tall female to short male.

Tall to short; same sex and chest-to-waist circumference ratio.
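The conditional $A_t$ used for these interpolations can be computed in a few lines. The sketch below is a minimal illustration, assuming each measurement set is stored as a fixed-length numeric vector; the function name and the three example values (height, chest, and waist in centimetres) are made up for the example and do not reflect AnthroNet's actual measurement layout. Sex is treated as a separate conditioning input and is not interpolated here.

```python
import numpy as np

def interpolate_measurements(anchors, t):
    """Piece-wise linear path through a sequence of measurement vectors.

    anchors: (K, D) array-like with K >= 2 measurement vectors
    t:       position along the path in [0, 1]; 0 gives the first
             anchor and 1 gives the last
    """
    anchors = np.asarray(anchors, dtype=float)
    segments = len(anchors) - 1
    s = float(np.clip(t, 0.0, 1.0)) * segments  # position in segment units
    i = min(int(s), segments - 1)               # index of the active segment
    w = s - i                                   # local weight within it
    return (1.0 - w) * anchors[i] + w * anchors[i + 1]

# Hypothetical measurement vectors: [height, chest, waist] in cm.
A_1 = [170.0, 90.0, 75.0]    # leaner body
A_2 = [170.0, 110.0, 100.0]  # curvier body, same height

A_t = interpolate_measurements([A_1, A_2], t=0.5)
print(A_t)  # [170.  100.   87.5]
```

With two anchors this reduces to $A_t = (1 - t)\,A_1 + t\,A_2$. Passing four anchors ($A_1$, $A_2$, $A_3$, $A_4$) to the same function gives a piece-wise linear path that visits each of them in turn.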

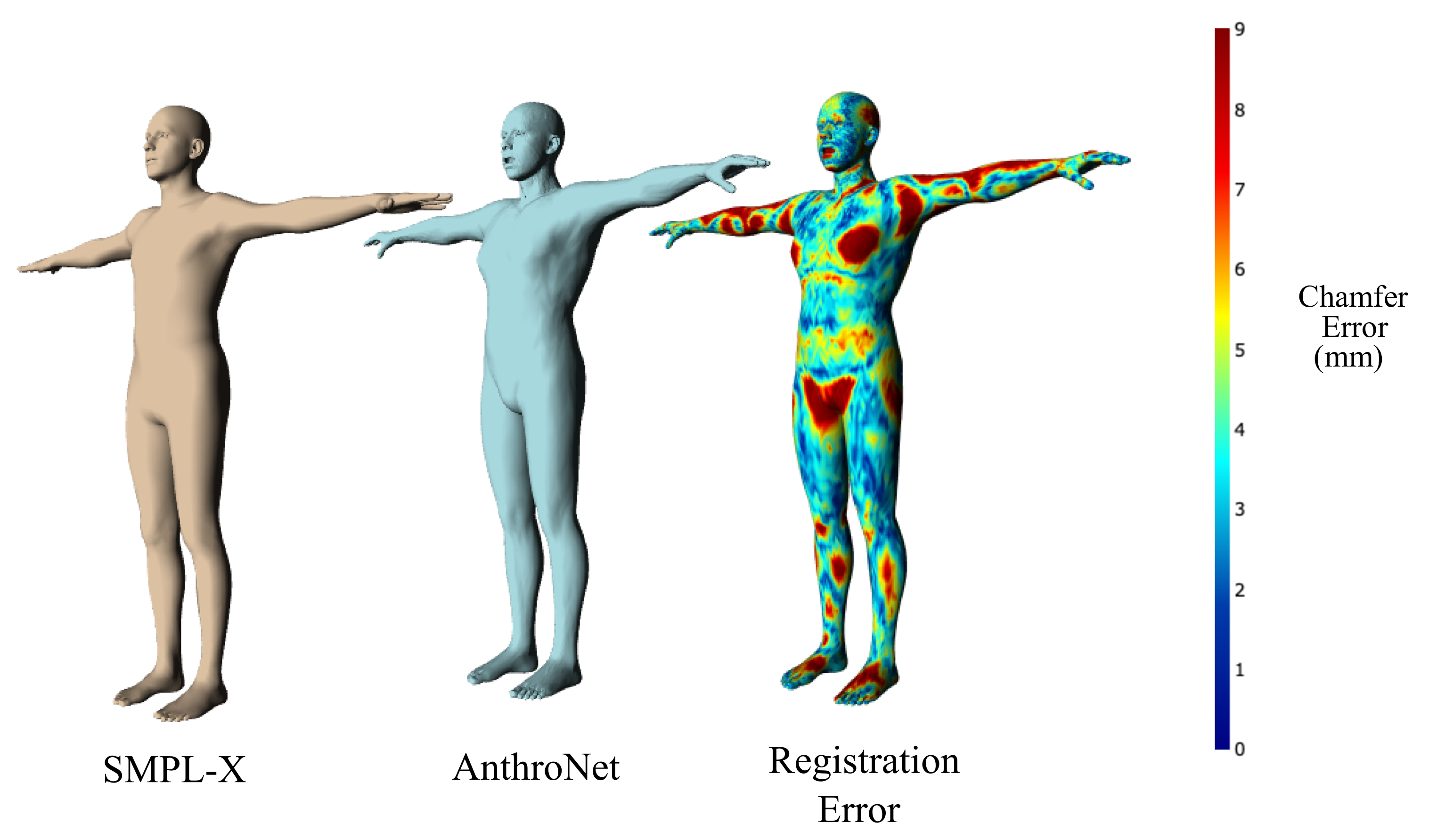

Registration Error

Illustration of Registration Error when AnthroNet is fitted to a SMPL-X mesh.

A large portion of the error is attributable to the fact that SMPL-X meshes are registered on humans wearing tight clothing, whereas our synthetic humans are unclothed.

Citation

@inproceedings{picetti2023anthronet,

title={AnthroNet: Conditional Generation of Humans via Anthropometrics},

author={Francesco Picetti and Shrinath Deshpande and Jonathan Leban and Soroosh Shahtalebi and Jay Patel and Peifeng Jing and Chunpu Wang and Charles Metze III and Cameron Sun and Cera Laidlaw and James Warren and Kathy Huynh and River Page and Jonathan Hogins and Adam Crespi and Sujoy Ganguly and Salehe Erfanian Ebadi},

year={2023},

eprint={2309.03812},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Related links

- Unity’s Perception Package: Perception toolkit for sim2real training and validation in Unity

- Unity Synthetic Humans: A package for creating Unity Perception compatible synthetic people

- PeopleSansPeople: Unity’s privacy-preserving human-centric synthetic data generator

- Unity Computer Vision

License

AnthroNet is licensed under a NON-COMMERCIAL SOFTWARE LICENSE AGREEMENT. See LICENSE for the full license text.